Quantum Computing

What is Quantum Computing?

Quantum computing is an emerging field of computer science that uses the principles of quantum mechanics to tackle problems beyond the practical reach of even the most powerful conventional computers. The technology is still under development, but it promises to solve certain complex problems that are currently unsolvable or too time-consuming for supercomputers. For some of these problems, future quantum computers could run exponentially faster than the best known classical methods, potentially reducing tasks that would take classical computers thousands of years to minutes.

Quantum mechanics, the study of matter and energy at atomic and subatomic scales, reveals the fundamental laws that quantum computers use to perform probabilistic calculations. Four key quantum mechanical principles are crucial to understanding this: superposition (a quantum system existing in a combination of multiple states at once), entanglement (correlated behavior of multiple particles that cannot be described independently of one another), decoherence (the decay of quantum states into classical states), and interference (probability amplitudes adding or canceling, which changes the likelihood of each outcome).

Unlike classical computers, which use bits (0s and 1s), quantum computers use qubits, which can exist in a superposition of both 0 and 1. Describing the state of n qubits takes 2^n amplitudes, so the space a quantum computer works in doubles with each additional qubit. However, when measured, each qubit outputs only a single bit of information. Quantum algorithms manipulate information in ways inaccessible to classical computers, offering potential speed advantages for specific problems.
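To make the scaling concrete, here is a minimal sketch in plain Python. It assumes the standard state-vector picture (one amplitude per basis state) and uses hypothetical helper names, `uniform_superposition` and `measure`, that are not from any quantum library. It shows that the number of amplitudes doubles with each qubit, while a measurement still returns only one bit per qubit.

```python
import math
import random

def uniform_superposition(n_qubits):
    """State vector for n qubits in an equal superposition of all basis states."""
    dim = 2 ** n_qubits              # one amplitude per basis state
    amplitude = 1 / math.sqrt(dim)   # chosen so the probabilities sum to 1
    return [amplitude] * dim

def measure(state, n_qubits, rng):
    """Sample one outcome with probability |amplitude|^2 and return it as a bit string."""
    weights = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=weights)[0]
    return format(index, "0{}b".format(n_qubits))

rng = random.Random(0)
for n in (1, 2, 3, 10):
    state = uniform_superposition(n)
    bits = measure(state, n, rng)
    print(n, "qubits:", len(state), "amplitudes, measured bits:", bits)
```

A classical simulation like this has to store every amplitude explicitly, which is why simulating even a few dozen qubits becomes expensive.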

As classical computing approaches material limitations in processing power, quantum computing offers a potential solution for certain complex tasks. With major companies and startups investing heavily in this technology, the quantum computing industry is projected to reach $1.3 trillion by 2035.

How does Quantum Computing work?

A primary difference between classical and quantum computers is that quantum computers use qubits instead of bits, and a register of qubits can represent an exponentially larger state space than the same number of bits. While quantum computing does use binary code, qubits process information differently from classical bits. But what are qubits and where do they come from?

What are Qubits?

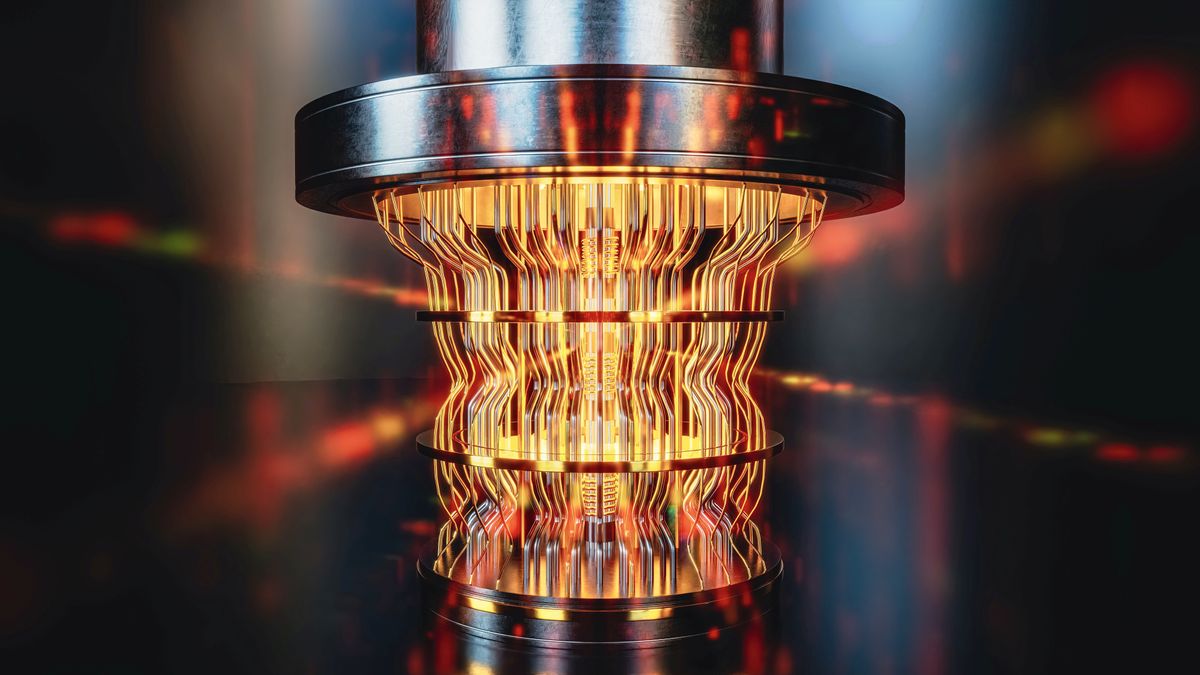

Qubits, the fundamental units of quantum information, are created by manipulating quantum particles like photons, electrons, trapped ions, and atoms, or by engineering systems that mimic their behavior, such as superconducting circuits. Many of these systems must be kept at extremely cold temperatures to minimize noise and prevent errors caused by decoherence.

Various types of qubits exist, each with strengths for different tasks. Common types include: superconducting qubits (known for speed and control), trapped ion qubits (known for long coherence and precise measurements), quantum dots (promising scalability and compatibility with current technology), photons (used for long-distance quantum communication), and neutral atoms (suitable for scaling and operations).

Classical computers struggle with some complex problems, such as factoring large numbers, because the number of possibilities to check grows too quickly. Qubits leverage superposition, which allows quantum computers to approach such problems differently. Imagine escaping a maze: a classical computer tries each path individually, while a quantum computer, loosely speaking, uses qubits to represent many paths at once and uses quantum interference to find the solution. More precisely, a quantum algorithm manipulates the probability amplitudes of the qubits, which act like interfering waves. The amplitudes cannot be read out directly. Instead, interference suppresses the amplitudes of incorrect solutions and reinforces those of correct ones, so that a final measurement returns a correct solution with high probability.

Key principles of Quantum Computing

Quantum mechanics, the foundation of quantum computing, operates differently than classical physics, with quantum particle behavior often appearing strange and counterintuitive. Describing these behaviors is challenging, as conventional understanding lacks the necessary framework. Key concepts for grasping quantum computing include superposition, entanglement, decoherence, and interference.

A single qubit, while basic, becomes powerful when placed in superposition, a weighted combination of all its possible states. Groups of qubits in superposition create complex computational spaces, allowing complex problems to be represented in new ways. This is often described as inherent parallelism, although a measurement still returns only one outcome.
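As an illustration of how a group of qubits is placed in superposition, the sketch below applies a Hadamard gate (a standard single-qubit gate, not named in the text above) to each of three qubits that start in the all-zeros state. The function name `apply_gate` and the bit-ordering convention are assumptions made for this example only.

```python
import math

# Hadamard gate: maps |0> to an equal superposition of |0> and |1>.
H = [[1 / math.sqrt(2),  1 / math.sqrt(2)],
     [1 / math.sqrt(2), -1 / math.sqrt(2)]]

def apply_gate(state, gate, target):
    """Apply a 2x2 gate to qubit `target` (0 = least significant bit of the index)."""
    new_state = [0.0] * len(state)
    for index, amplitude in enumerate(state):
        bit = (index >> target) & 1                # current value of the target qubit
        for new_bit in (0, 1):
            # Same basis state, but with the target qubit set to new_bit.
            new_index = (index & ~(1 << target)) | (new_bit << target)
            new_state[new_index] += gate[new_bit][bit] * amplitude
    return new_state

n_qubits = 3
state = [0.0] * (2 ** n_qubits)
state[0] = 1.0                                     # start in |000>
for qubit in range(n_qubits):
    state = apply_gate(state, H, qubit)

probabilities = [abs(a) ** 2 for a in state]
print([round(p, 3) for p in probabilities])        # all eight outcomes equally likely
```

After the loop, every one of the eight basis states carries the same amplitude, so each outcome has probability 1/8.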

Entanglement links qubits, so measuring one instantly reveals information about the others in the entangled system. However, measurement causes the system's superposition to collapse into a classical binary state (0 or 1). This transition from a quantum to a classical state can be intentional (through measurement) or unintentional (through decoherence caused by environmental factors). The intentional form is what allows quantum computers to interface with classical systems.
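The correlation described above can be sketched with the simplest entangled state, the Bell state (|00> + |11>)/sqrt(2). This is a toy sampler written for illustration, with hypothetical function names `bell_state` and `measure`. It only reproduces the measurement statistics and does not model the physics of collapse.

```python
import math
import random

def bell_state():
    """Amplitudes of (|00> + |11>)/sqrt(2), indexed in the order 00, 01, 10, 11."""
    amplitude = 1 / math.sqrt(2)
    return [amplitude, 0.0, 0.0, amplitude]

def measure(state, rng):
    """Sample one two-bit outcome with probability |amplitude|^2."""
    weights = [abs(a) ** 2 for a in state]
    index = rng.choices(range(len(state)), weights=weights)[0]
    return format(index, "02b")

rng = random.Random(0)
outcomes = [measure(bell_state(), rng) for _ in range(1000)]

# Each bit on its own looks random, but the two bits always agree.
always_agree = all(bits[0] == bits[1] for bits in outcomes)
print(always_agree, sorted(set(outcomes)))
```

Reading the first bit of any sample tells you the second bit with certainty, even though neither bit was determined before the measurement.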

Entangled qubits in superposition behave like waves whose amplitudes determine outcome probabilities. These waves can reinforce (constructive interference) or cancel each other out (destructive interference), altering the likelihood of specific outcomes.
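A small worked example of both kinds of interference, assuming a single qubit and the Hadamard gate (chosen here for illustration): applying the gate once creates an equal superposition, and applying it a second time makes the two contributions to the 1 outcome cancel while the contributions to the 0 outcome reinforce.

```python
import math

S = 1 / math.sqrt(2)

def hadamard(state):
    """Apply a Hadamard gate to a single-qubit state [a0, a1]."""
    a0, a1 = state
    return [S * (a0 + a1), S * (a0 - a1)]

zero = [1.0, 0.0]
once = hadamard(zero)     # equal superposition of 0 and 1
twice = hadamard(once)    # the two paths to 1 cancel, the two paths to 0 add up

print([round(abs(a) ** 2, 3) for a in once])
print([round(abs(a) ** 2, 3) for a in twice])
```

The first line printed should show two equal probabilities, and the second should show the qubit back in the 0 state with probability close to 1.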

In essence, quantum computing leverages two seemingly contradictory principles: the random behavior of qubits in superposition and the correlated behavior of entangled qubits, even when separated. A quantum computation begins by preparing a superposition of states. A user-defined quantum circuit then creates entanglement, leading to interference between these states according to a specific algorithm. This interference amplifies the probabilities of correct solutions while suppressing incorrect ones.
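The three stages above (prepare a superposition, apply a circuit, let interference favor the correct answer) can be sketched with a tiny state-vector simulation of Grover's search over four items. Grover's algorithm is not mentioned in the text; it is used here only as a well-known example, and the function name `grover_two_qubits` is hypothetical. The simulation works on the amplitudes directly rather than on individual gates.

```python
import math

def grover_two_qubits(marked):
    """Run one Grover iteration over 4 items and return the outcome probabilities."""
    n_items = 4
    # Step 1: equal superposition over all candidate answers.
    state = [1 / math.sqrt(n_items)] * n_items
    # Step 2: the oracle flips the sign of the marked item's amplitude.
    state[marked] = -state[marked]
    # Step 3: diffusion reflects every amplitude about the mean, so the
    # marked amplitude grows while the others cancel.
    mean = sum(state) / n_items
    state = [2 * mean - a for a in state]
    return [abs(a) ** 2 for a in state]

probs = grover_two_qubits(marked=2)
print(probs)
```

For four items, a single iteration is enough to concentrate all of the probability on the marked index. Larger search spaces need more iterations, and the success probability is generally high rather than exactly 1.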

Classical Computing vs Quantum Computing

Quantum computing leverages the principles of quantum mechanics, which govern the subatomic world and differ significantly from classical physics. Although all systems are fundamentally quantum at the subatomic level, classical computers don’t fully exploit these quantum properties in their calculations. Quantum computers, however, capitalize on these properties to perform computations beyond the reach of even the most powerful conventional machines.

What is a Classical computer?

Classical computers, from early punch-card systems to modern supercomputers, operate similarly, processing information sequentially using binary bits (0s or 1s). These bits, combined into binary code and manipulated with logic operations, enable computers to execute a wide range of tasks.

What is a Quantum computer?

In contrast, quantum computers use qubits, which, unlike bits, can exist in a superposition of both 0 and 1. This superposition, combined with quantum entanglement (where multiple qubits are linked), allows quantum computers to process data in a fundamentally different way.

The key differences are: classical computers use bits and sequential processing, while quantum computers use qubits, superposition, and a form of parallel processing based on quantum logic and interference. Instead of calculating each step sequentially, quantum circuits act on a superposition of many inputs at once, which can significantly increase efficiency for certain complex problems. Quantum computers are probabilistic: each run returns a sample from a range of likely solutions, so a computation is often repeated many times. Classical computers are deterministic, yielding a single, definite answer. While this probabilistic nature might seem less precise, it can offer enormous speed advantages for some highly complex calculations.

Although quantum computers won't replace classical computers for most everyday tasks, they are expected to excel at specific problems involving very large search spaces or complex calculations like prime factoring. Cloud-connected quantum computers and hybrid systems are already being used to explore advanced applications, promising to revolutionize existing industries and potentially create entirely new ones.